YOLO (You Only Look Once) is a deep-learning framework for object detection. Because of its popularity, many versions (v3, v4, v5, ...) have been released. The version I use in this example is YOLOv5.

GitHub

https://github.com/ultralytics/yolov5/wiki/Train-Custom-Data

I followed the steps on the GitHub page above.

Roboflow - Creating a Custom Dataset

app.roboflow.com

In Roboflow, you can build your own dataset by labeling images by hand with the mouse.

You can also download datasets that other people have already built.

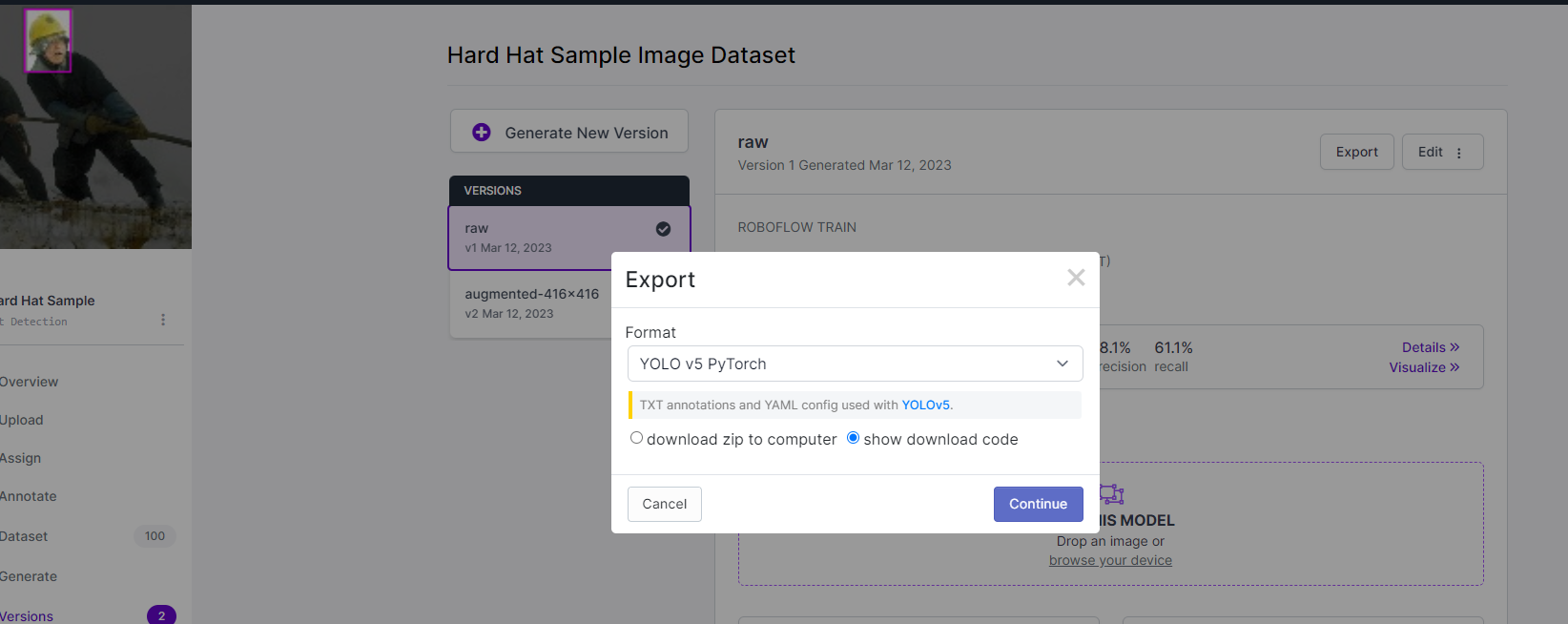

Once the dataset is created, click the Export button and choose YOLO v5 PyTorch as the format.
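In the YOLO v5 PyTorch format, each image comes with a .txt label file that has one line per object: `class x_center y_center width height`, where the four coordinates are normalized to the range 0-1 relative to the image width and height. As a minimal sketch assuming that format, this converts one label line back to pixel corner coordinates. `yolo_to_xyxy` is a hypothetical helper, not a YOLOv5 or Roboflow function.

```python
def yolo_to_xyxy(line: str, img_w: int, img_h: int):
    """Convert one YOLO label line to (class_id, x1, y1, x2, y2) in pixels.

    Assumes the YOLO txt format: class x_center y_center width height,
    with all four coordinates normalized to the range 0-1.
    """
    parts = line.split()
    class_id = int(parts[0])
    xc, yc, w, h = (float(v) for v in parts[1:5])
    # Scale the normalized values back to pixel units
    xc, w = xc * img_w, w * img_w
    yc, h = yc * img_h, h * img_h
    # Center/size -> top-left and bottom-right corners
    x1, y1 = xc - w / 2, yc - h / 2
    x2, y2 = xc + w / 2, yc + h / 2
    return class_id, x1, y1, x2, y2


# A box centered in a 416x416 image, covering half its width and height
print(yolo_to_xyxy("0 0.5 0.5 0.5 0.5", 416, 416))
# -> (0, 104.0, 104.0, 312.0, 312.0)
```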

Colab example notebook (YOLOv5)

YOLOv5-Custom-Training.ipynb

* The notebook had a path problem partway through, so it needed a few small changes.

Training (train.py)

This is the command for training the YOLOv5 model:

```
!python train.py --img 416 --batch 16 --epochs 150 --data data.yaml --weights yolov5s.pt --cache
```

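--img 416: Sets the input image size to 416x416 pixels.

--batch 16: Sets the batch size used during training to 16 images.

--epochs 150: Trains for 150 epochs, i.e. 150 full passes over the training dataset.

--data data.yaml: Specifies the dataset configuration file to train on (the data.yaml exported from Roboflow, which lists the image folders and class names).

--weights yolov5s.pt: Specifies the pretrained YOLOv5 weights to start from. You can load a model you trained earlier, or choose the YOLOv5 model size this way (yolov5s.pt, yolov5m.pt, yolov5l.pt, yolov5x.pt).

--cache: Caches the dataset images (in RAM by default) and reuses them instead of reloading them from disk every epoch, which speeds up training.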
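To make the relationship between --batch and --epochs concrete: one epoch is one pass over the whole training set, split into batches of --batch images, so the number of batches processed is ceil(images / batch) per epoch times the number of epochs. The sketch below uses a hypothetical 1,000-image training set, not a figure from this post; `batches_per_run` is an illustrative helper.

```python
import math


def batches_per_run(num_images: int, batch_size: int, epochs: int):
    """Return (batches per epoch, total batches) for a training run.

    The last batch of an epoch may be smaller than batch_size, hence ceil.
    """
    per_epoch = math.ceil(num_images / batch_size)
    return per_epoch, per_epoch * epochs


# Hypothetical dataset of 1,000 training images with --batch 16 --epochs 150
print(batches_per_run(1000, 16, 150))  # -> (63, 9450)
```

YOLOv5 accumulates gradients over several batches before each optimizer step when the batch is small, so the number of weight updates can be lower than the number of batches.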

Detection (detect.py)

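When the training above finishes, the trained model is saved as /content/yolov5/runs/train/exp/weights/best.pt (best.pt holds the weights that scored best on the validation set, and last.pt the weights from the final epoch; .pt is the PyTorch model file extension). Run detection with it:

```
!python detect.py --weights /content/yolov5/runs/train/exp/weights/best.pt --img 416 --conf 0.1 --source /content/yolov5/test/images
```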

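Pass the path of the folder containing the original images to test to --source. --conf 0.1 sets the confidence threshold, so detections scoring below 0.1 are discarded.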
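Both commands take --img 416. For detection, YOLOv5 resizes each image to fit the target size while keeping its aspect ratio and fills the leftover area with padding ("letterboxing"). Below is a simplified sketch of that arithmetic, assuming the image is padded out to a full 416x416 square (YOLOv5 itself may pad less, only up to a multiple of the model stride). `letterbox_params` is a hypothetical helper, not a YOLOv5 function.

```python
def letterbox_params(img_w: int, img_h: int, target: int = 416):
    """Compute scale, resized size and per-side padding that fit an image
    into a target x target square without distorting its aspect ratio."""
    scale = min(target / img_w, target / img_h)  # the longer side ends up == target
    new_w, new_h = round(img_w * scale), round(img_h * scale)
    pad_x = (target - new_w) / 2  # padding added on the left and on the right
    pad_y = (target - new_h) / 2  # padding added on the top and on the bottom
    return scale, (new_w, new_h), (pad_x, pad_y)


# A 1280x720 photo: scaled by 0.325 to 416x234, then 91 px of padding top and bottom
print(letterbox_params(1280, 720))
# -> (0.325, (416, 234), (0.0, 91.0))
```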

Checking the detection results

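When detection finishes, the output images are saved in /content/yolov5/runs/detect/exp/ (the folder name can increment to exp2, exp3, and so on with each run), so display them with a short Python script:

```python
import glob

from IPython.display import Image, display

# Show every result image in the detect output folder
for imageName in glob.glob('/content/yolov5/runs/detect/exp/*.jpg'):  # assuming JPG
    display(Image(filename=imageName))
    print("\n")
```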
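Because the output folder name increments (exp, exp2, exp3, ...), a hard-coded exp/ path can end up showing an older run. Below is a minimal sketch that picks the folder with the highest number, assuming that naming scheme; `latest_run_dir` is a hypothetical helper, not part of YOLOv5.

```python
import re
from pathlib import Path


def latest_run_dir(parent: str, prefix: str = "exp"):
    """Return the run folder with the highest index (exp=1, exp2=2, ...),
    or None if no matching folder exists."""
    pattern = re.compile(rf"^{re.escape(prefix)}(\d*)$")
    best, best_idx = None, -1
    for path in Path(parent).iterdir():
        match = pattern.match(path.name)
        if path.is_dir() and match:
            idx = int(match.group(1) or 1)  # a bare "exp" counts as run 1
            if idx > best_idx:
                best, best_idx = path, idx
    return best


# Usage in the notebook (path taken from this post):
# run = latest_run_dir('/content/yolov5/runs/detect')
# for imageName in sorted(run.glob('*.jpg')):
#     display(Image(filename=str(imageName)))
```

Sorting by the numeric suffix rather than by name avoids the string-order trap where "exp10" would sort before "exp2".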

Running with the yolov5 module from PyPI

Install the yolov5 package:

```
!pip install yolov5
```

https://pypi.org/project/yolov5/

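Installing the yolov5 package also makes it easy to load a .pt model file directly and customize things freely, such as adjusting the settings to your liking or drawing the output image yourself with cv2.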

```python
import cv2
import yolov5
from IPython.display import Image, display

# Load the custom model trained above
model = yolov5.load('/content/yolov5/runs/train/exp2/weights/best.pt')

# set model parameters
model.conf = 0.25  # NMS confidence threshold
model.iou = 0.45  # NMS IoU threshold
model.agnostic = False  # NMS class-agnostic
model.multi_label = False  # NMS multiple labels per box
model.max_det = 1000  # maximum number of detections per image

# set image
img_path = '/content/image.jpg'

# perform inference
results = model(img_path)

# Optional alternatives (each call overwrites `results`):
# results = model(img_path, size=1280)     # inference with larger input size
# results = model(img_path, augment=True)  # inference with test time augmentation

# parse results
predictions = results.pred[0]
boxes = predictions[:, :4]  # x1, y1, x2, y2
scores = predictions[:, 4]
categories = predictions[:, 5]

# Draw bounding boxes on the image
img = cv2.imread(img_path)
for box, score, label in zip(boxes, scores, categories):
    x1, y1, x2, y2 = box.int().tolist()
    cv2.rectangle(img, (x1, y1), (x2, y2), (0, 0, 255), 2)
    # Confidence as a whole-number percentage, e.g. "87%"
    cv2.putText(img, f"{score.item() * 100:.0f}%", (x1, y1 - 10),
                cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

# Encode the result as PNG bytes and show it in the notebook
display(Image(data=cv2.imencode('.png', img)[1].tobytes()))

# show detection bounding boxes on image
# results.show()
```
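model.conf and model.iou in the code above are the two thresholds of non-maximum suppression (NMS): boxes scoring below conf are dropped, and among boxes that overlap by more than iou (intersection over union), only the highest-scoring one survives. Below is a simplified pure-Python sketch of that idea, not YOLOv5's actual implementation (which works on tensors, handles classes, and also applies max_det); `iou` and `nms` are illustrative names.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, conf=0.25, iou_thres=0.45):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    # 1) Drop low-confidence boxes, then sort the rest by score (descending)
    order = sorted(
        (i for i, s in enumerate(scores) if s >= conf),
        key=lambda i: scores[i],
        reverse=True,
    )
    keep = []
    # 2) Keep a box only if it does not overlap an already-kept box too much
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_thres for k in keep):
            keep.append(i)
    return keep


boxes = [(10, 10, 110, 110), (15, 15, 115, 115), (200, 200, 260, 260), (0, 0, 5, 5)]
scores = [0.9, 0.8, 0.7, 0.1]
print(nms(boxes, scores))  # -> [0, 2]
```

Box 1 overlaps box 0 with an IoU of about 0.82, so it is suppressed, and box 3 falls below the 0.25 confidence threshold. Because this sketch ignores classes, it corresponds to the class-agnostic case (model.agnostic = True).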

A face-detection Colab notebook I made for practice

https://colab.research.google.com/drive/1GdaLVQF9XJuGUDdo21uTxVvXkv6oIPwe

HackLog